The European Commission has taken a decisive step toward implementing Article 50 of the Artificial Intelligence Regulation (AI Act) through a public consultation—now concluded—focused on how to detect and label AI-generated content. The goal is to define, before 2026, guidelines and a Code of Practice to ensure transparency and inform users that they are interacting with an AI system.

On 4 September, the European Commission launched a public consultation on the implementation of Article 50 of the AI Act; it closed on 9 October. The Regulation, in force since 1 August 2024, establishes transparency obligations that will apply from 2 August 2026.

What is the aim of the consultation?

To gather views on how to detect and label AI-generated or manipulated content and to ensure that users are informed when they are interacting with AI systems. To that end, the consultation poses practical questions: which marking techniques are most effective (digital watermarks, metadata, etc.), when interaction with an AI system can be considered obvious (and therefore not require notification), and how to disclose the artificial nature of deepfakes used in creative works.

The consultation will help to develop two key instruments: practical guidelines covering all of Article 50, and a Code of Practice focused on generative AI systems. The guidelines will provide legal clarity, while the Code will set out concrete technical solutions. Together, these instruments are intended to give providers and deployers a practical roadmap for complying with the transparency obligations ahead of 2026.

What does Article 50 of the AI Act require?

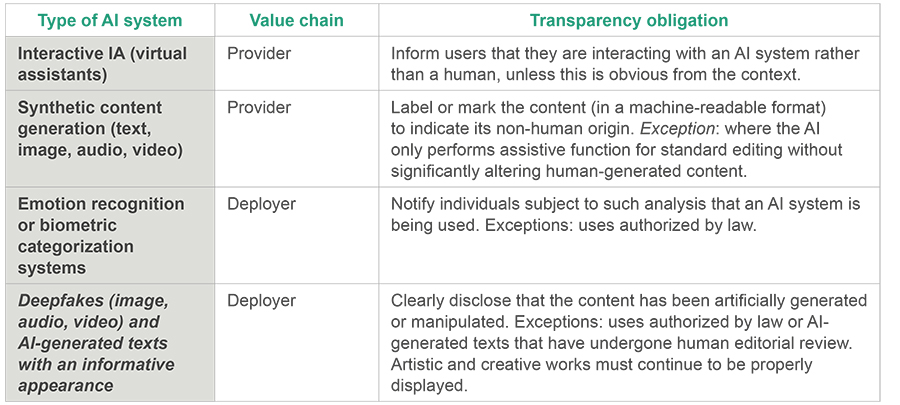

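Article 50 sets out several transparency obligations for providers and deployers (those who use AI systems in a professional capacity) across four categories of AI systems:

- AI systems that interact directly with people: providers must ensure that users are informed they are interacting with an AI system, unless this is obvious from the context.

- AI systems that generate synthetic audio, image, video or text content: providers must ensure that outputs are marked in a machine-readable format and are detectable as artificially generated or manipulated.

- Emotion recognition and biometric categorisation systems: deployers must inform the people exposed to them.

- Deepfakes and AI-generated text published to inform the public on matters of public interest: deployers must disclose that the content has been artificially generated or manipulated.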

Although the AI Act requires that such information be presented in a clear, accessible, and distinguishable manner, it does not specify how this should be achieved—hence the importance of this consultation.

What are its practical implications?

- Technical standards for the industry: the process is expected to lead to technical standards on how to mark AI-generated content. Potential solutions include invisible digital watermarks embedded in images or videos, metadata tags in files, or cryptographic provenance proofs. These solutions will need to be reliable, interoperable—so different tools can read them—and resistant to tampering.

- Greater transparency for users and content creators: for citizens, these measures will mean that in the future they should be able to clearly identify whether a piece of digital content has been generated by AI. For instance, a synthetic photograph or cloned voice recording could carry a detectable signal (such as a digital mark) that allows social media platforms, media outlets, or users themselves to verify its origin. This will help combat disinformation and fraud.

- Obligations for AI providers and deployers: technology companies and developers of generative models (e.g. GPT, DALL·E, Midjourney, etc.) will need to integrate labelling functions into their systems to meet the new transparency requirements. For deployers, such as media outlets using AI to draft articles or studios producing audiovisual content with AI, it will be essential to follow the guidelines to label content accurately and at the appropriate stage. Media organizations will also need to define internal protocols to determine when content should be classified as “human” or “AI-generated,” depending on the level of human oversight involved.

- Impact on the creative sector: in creative industries, the labelling obligations for deepfakes will also have implications. For example, audiovisual productions using AI to digitally recreate actors or settings will have to inform the audience accordingly. The AI Act allows flexibility in artistic or fictional contexts—a disclosure in the credits or description may suffice—so as not to interfere with the artistic experience.
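
To make the marking techniques mentioned above more concrete, here is a minimal Python sketch of a metadata label combined with a simple cryptographic provenance proof. It is an illustration only, not a method prescribed by the AI Act or the forthcoming Code of Practice. The field names (`ai_generated`, `generator`), the function names and the demo key are all hypothetical, and the shared-secret HMAC is a simplification: a real provenance scheme would more likely rely on public-key signatures so that any third party can verify the label.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret, for illustration only. A real scheme would more
# likely use public-key signatures so that anyone can verify the label.
DEMO_KEY = b"demo-key-not-for-production"


def make_label(content: bytes, generator: str, key: bytes = DEMO_KEY) -> dict:
    """Build a machine-readable label binding an 'AI-generated' claim to the content."""
    claim = {
        "ai_generated": True,
        "generator": generator,  # hypothetical field name
        "sha256": hashlib.sha256(content).hexdigest(),
    }
    # Serialise deterministically so signer and verifier hash the same bytes.
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_label(content: bytes, label: dict, key: bytes = DEMO_KEY) -> bool:
    """Return True only if the label is untampered and matches this exact content."""
    payload = json.dumps(label["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, label["signature"]):
        return False  # the claim was altered after signing
    return label["claim"]["sha256"] == hashlib.sha256(content).hexdigest()


if __name__ == "__main__":
    image_bytes = b"synthetic image bytes"
    label = make_label(image_bytes, generator="example-model")
    print(verify_label(image_bytes, label))              # True
    print(verify_label(image_bytes + b"edited", label))  # False
```

The sketch shows why tamper resistance matters: if either the content or the claim is changed after labelling, verification fails. It does not address interoperability or the robustness of invisible watermarks, which are separate questions the technical standards would need to settle.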

What comes next?

With the public consultation now closed, the European Commission and the AI Office will move to the next phase: analysing the contributions received and beginning the drafting process. The work will start with an inaugural plenary session in early November, followed by a collaborative drafting period extending until June 2026, the target date for finalising the text.

Both instruments will be voluntary, but adherence to them will serve as evidence of compliance with Article 50. In short, the framework is under construction, the rules are taking shape, and the clock is ticking towards August 2026. Will the proposed measures be enough? Will they strike the right balance between innovation and protection? And how will the market respond?

Time will tell — because if there is one thing we’ve learned about AI, it’s that everything can change… fast.

Marta Valero